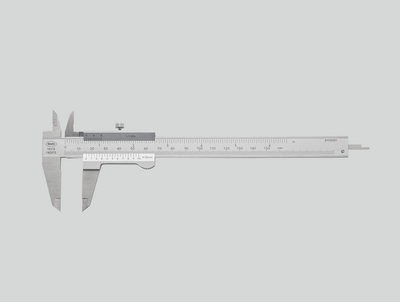

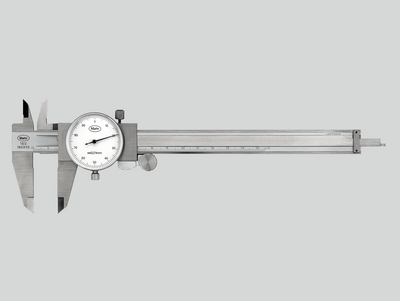

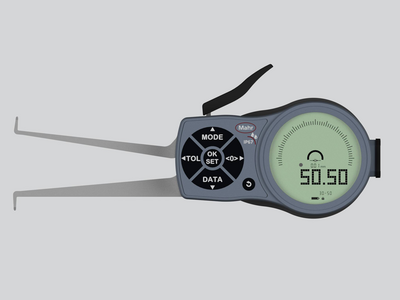

This is true for every hand tool or gage used in a manufacturing environment to verify the quality of the parts produced, from calipers and micrometers to dial indicators and electronic amplifier systems that measure to sub-micron resolution. Calibration has always been necessary for maintaining quality, but there is also an external reason to establish and maintain a regular gage calibration program: customer requirements. It is now common for companies to demand that their suppliers document their quality efforts from start to finish.

Some large companies with thousands of hand measuring tools, dial/digital indicators and comparators can cost-justify hiring or training gage calibration specialists and supplying them with the equipment and resources to perform virtually all calibration in-house. Even so, calibrating dial and digital indicators or comparators can be a very time-consuming and operator-intensive process.

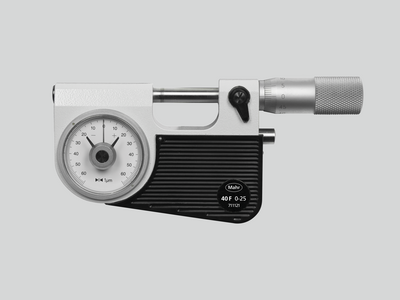

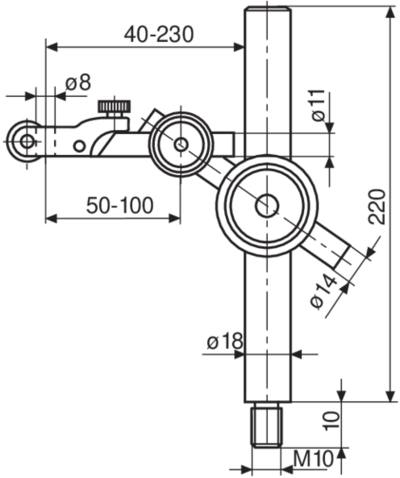

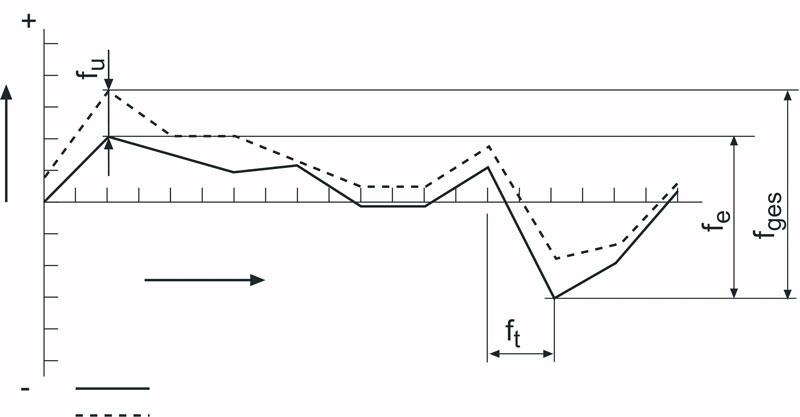

Most dial indicators are relatively short-range, but they need to be checked at multiple points throughout their range to verify accuracy, and then checked again in the reverse direction to verify that hysteresis is within requirements. Historically, most dial indicator calibrators have been built around a high-precision mechanical micrometer: the micrometer is turned to a known point, and any deviation is observed on the dial indicator. Even for a short-range indicator, the process involves moving a mechanical dial calibrator by hand to 20 or more points along the indicator's travel. This is manageable for a short-range indicator, but a longer-range indicator, say 12.5, 25, 50 or even 100 mm of range, has many more positions to set and readings to observe and record.
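To illustrate what those forward and reverse readings are reduced to, the following Python sketch computes indication errors and hysteresis from a set of check points. It assumes one common convention: the error at a point is the indicator reading minus the reference position set by the calibrator, hysteresis is the largest forward-minus-reverse difference at any single point, and total deviation is the spread between the largest and smallest error over both directions. `CheckPoint` and `summarize` are hypothetical names; the applicable standard or customer specification governs the exact definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckPoint:
    """One calibration point; all values in micrometres (hypothetical record)."""
    reference_um: float  # position set by the calibrator's micrometer or spindle
    forward_um: float    # indicator reading, approached in the increasing direction
    reverse_um: float    # indicator reading, approached in the decreasing direction


def summarize(points: list[CheckPoint]) -> dict[str, float]:
    """Reduce forward/reverse readings to error and hysteresis figures."""
    if not points:
        raise ValueError("at least one check point is required")
    # Indication error = reading minus reference, for each direction.
    forward_errors = [p.forward_um - p.reference_um for p in points]
    reverse_errors = [p.reverse_um - p.reference_um for p in points]
    all_errors = forward_errors + reverse_errors
    return {
        # Largest single error magnitude in either direction.
        "max_abs_error_um": max(abs(e) for e in all_errors),
        # Spread of all errors across the range, both directions combined.
        "total_deviation_um": max(all_errors) - min(all_errors),
        # Largest forward/reverse disagreement at one reference point.
        "hysteresis_um": max(abs(p.forward_um - p.reverse_um) for p in points),
    }


if __name__ == "__main__":
    # Illustrative readings only, not measured data.
    readings = [
        CheckPoint(0.0, 0.0, 1.0),
        CheckPoint(1000.0, 1002.0, 1003.0),
        CheckPoint(2000.0, 2001.0, 2001.0),
    ]
    print(summarize(readings))
```

Every one of these numbers depends on a reading that, in the manual method, a person has to take and write down correctly.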

This also demands significant time and concentration from the user. Calibrating many indicators throughout the day is stressful for the operator, both physically, from hand-positioning the micrometer head to hundreds if not thousands of points, and visually, from the eye strain of reading both the micrometer head and the indicator. Reading is also error-prone, since people naturally (and unintentionally) transpose digits or simply misread. With a dial indicator in particular, viewing the dial at an angle rather than straight on introduces parallax, which leads to a misread result.

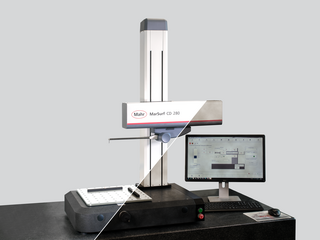

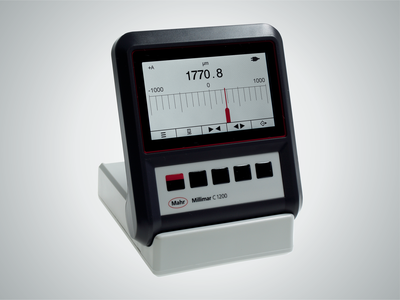

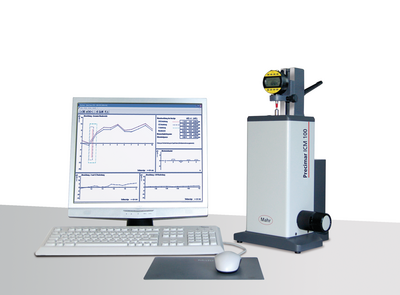

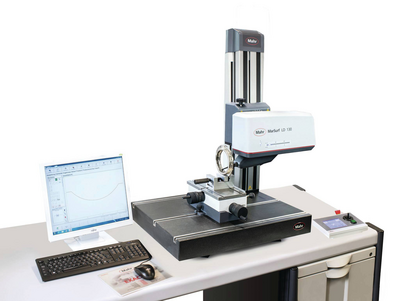

To reduce operator stress and increase productivity, automated calibrators are available that, based on the indicator being tested, drive a precision spindle to each desired position. The operator then reads and records the deviations. These machines significantly reduce the hand and arm strain caused by constantly turning the micrometer head, and this is a real improvement. Still, the process takes time, and the operator must still read and record the indicator's values. More can be done.

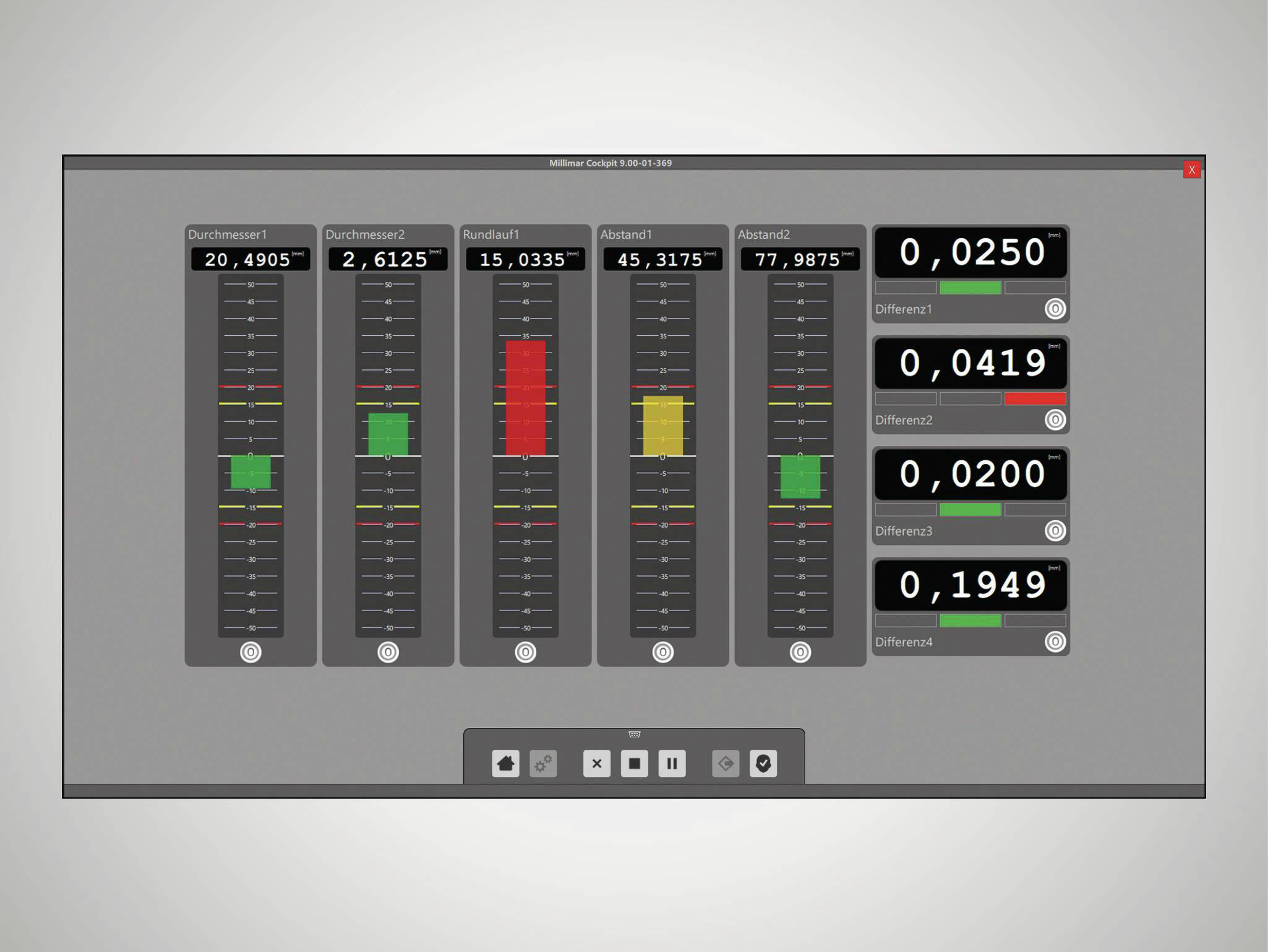

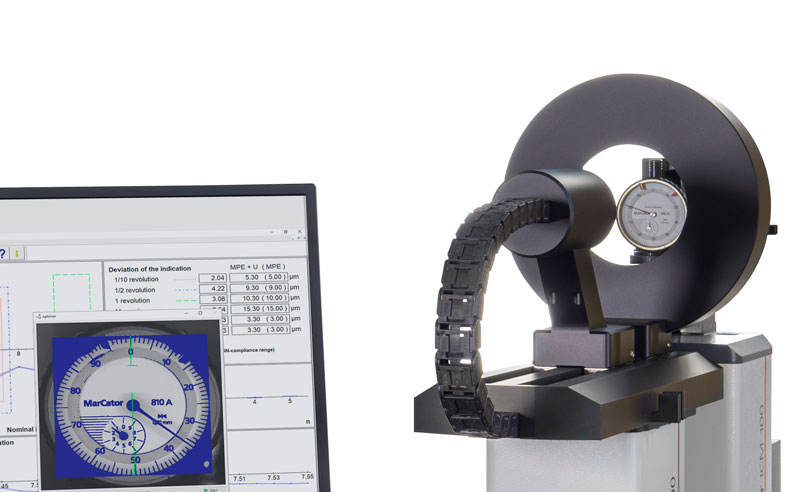

The real improvement would be to remove the operator from the loop: install the indicator in the calibration tool, tell the gage which indicator specifications to check against, and then let the gage measure and certify the indicator without operator involvement. This frees the gage technician to be productive while the automated calibrator is working, whether preparing the next indicator to be checked, signing off certifications or even starting a different calibration process.
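"Telling the gage what to measure for" amounts to attaching an acceptance specification to the indicator and letting the calibrator make the pass/fail decision. Here is a minimal Python sketch that assumes the specification consists of just two limits, total deviation and hysteresis, in micrometres. `IndicatorSpec` and `certify` are hypothetical names, and the limit values in the usage lines are placeholders, not figures from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndicatorSpec:
    """Hypothetical acceptance limits entered for the indicator under test."""
    model: str
    max_total_deviation_um: float
    max_hysteresis_um: float


def certify(spec: IndicatorSpec, total_deviation_um: float,
            hysteresis_um: float) -> tuple[bool, list[str]]:
    """Compare measured results with the spec; return (passed, failure reasons)."""
    failures = []
    if total_deviation_um > spec.max_total_deviation_um:
        failures.append(
            f"total deviation {total_deviation_um} um exceeds "
            f"{spec.max_total_deviation_um} um"
        )
    if hysteresis_um > spec.max_hysteresis_um:
        failures.append(
            f"hysteresis {hysteresis_um} um exceeds {spec.max_hysteresis_um} um"
        )
    # Pass only when no limit was exceeded.
    return (not failures, failures)


if __name__ == "__main__":
    # Placeholder limits for illustration only.
    spec = IndicatorSpec("example 10 mm dial", max_total_deviation_um=15.0,
                         max_hysteresis_um=3.0)
    print(certify(spec, total_deviation_um=12.0, hysteresis_um=4.0))
```

Returning the reasons along with the verdict lets the failure cause go straight onto the certificate instead of being written up by hand.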

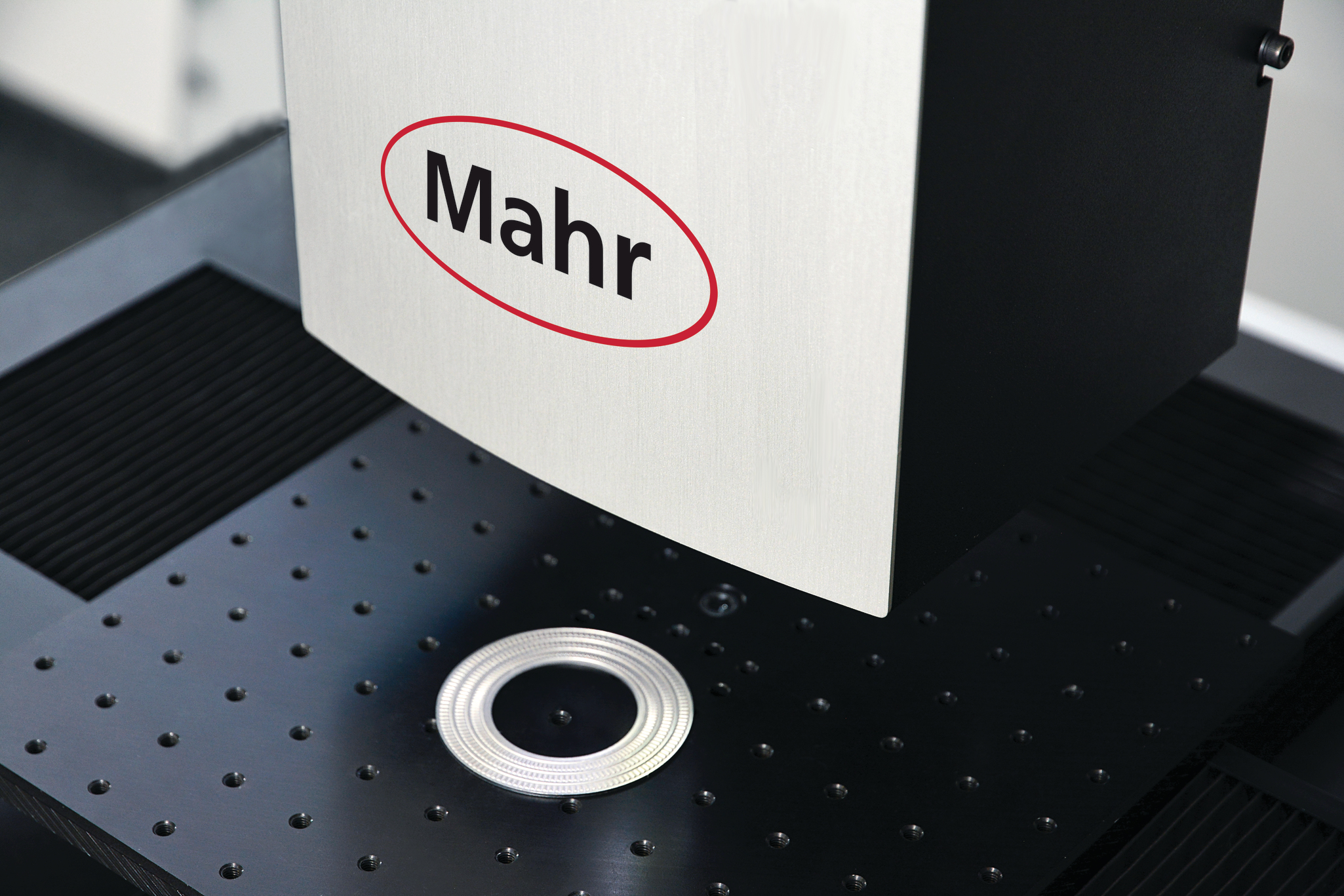

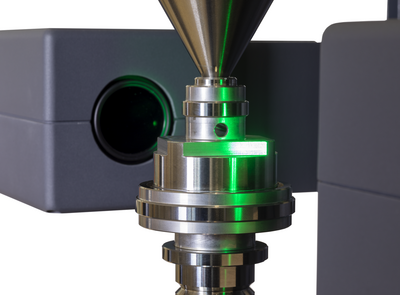

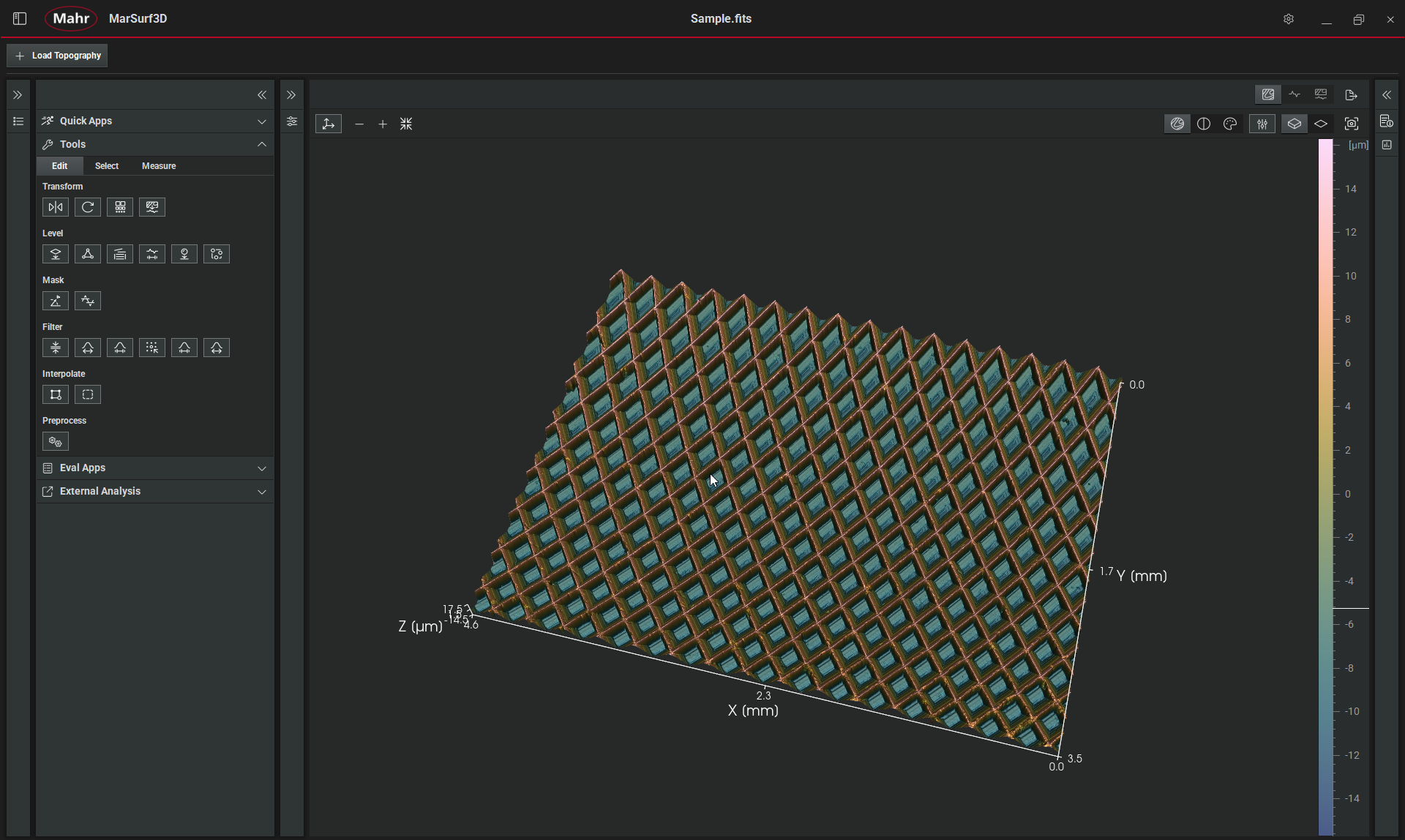

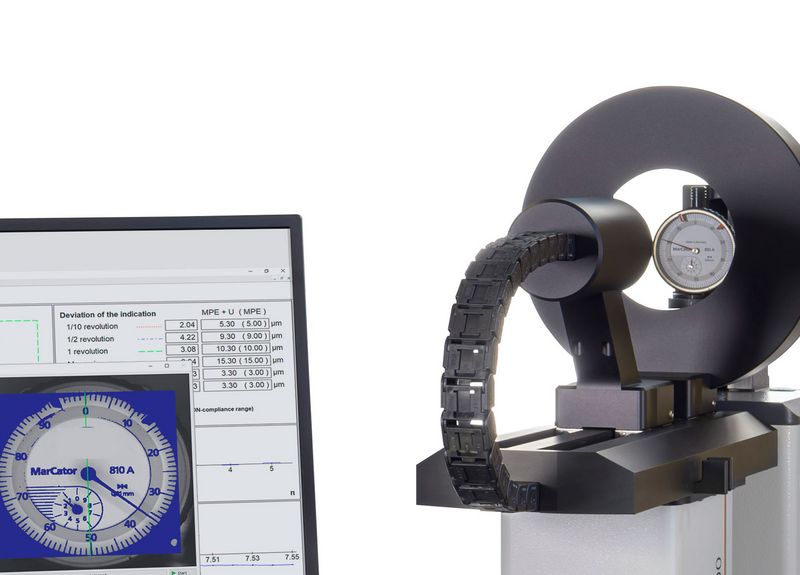

Today, with modern vision systems, it is possible to “read” a dial or digital indicator or comparator. Once the indicator is identified, the system knows what its dial should look like. It then processes an image to locate the pointer relative to the graduations and interpolates that position as a measurement. With digital indicators, the system's camera scans the digital display, the controller analyzes (“reads”) the digits, and the deviation from the reference position is calculated.
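Once the vision system has located the pointer and the graduation marks, reading the dial becomes a matter of interpolating between the two marks on either side of the pointer. The Python sketch below assumes the image processing (not shown) has already produced the pointer angle and the angles of the graduation marks, sorted and unwrapped so they increase within one revolution, with mark i representing i graduations. `interpolate_dial` is a hypothetical name, not any vendor's API. Interpolating between the neighbouring marks actually detected, rather than assuming perfectly uniform spacing, is one way to tolerate slight irregularities in the mark positions as the camera sees them.

```python
import bisect


def interpolate_dial(pointer_deg: float, mark_angles_deg: list[float],
                     graduation_value_mm: float) -> float:
    """Convert a pointer angle into a reading by interpolating between marks.

    mark_angles_deg[i] is the detected angle of graduation i, sorted ascending.
    """
    if len(mark_angles_deg) < 2:
        raise ValueError("need at least two graduation marks")
    if not mark_angles_deg[0] <= pointer_deg <= mark_angles_deg[-1]:
        raise ValueError("pointer lies outside the detected graduations")
    # Index of the mark at or just below the pointer; clamp so a pointer
    # sitting exactly on the last mark still has a following mark.
    i = min(bisect.bisect_right(mark_angles_deg, pointer_deg) - 1,
            len(mark_angles_deg) - 2)
    lo, hi = mark_angles_deg[i], mark_angles_deg[i + 1]
    fraction = (pointer_deg - lo) / (hi - lo)  # position between the two marks
    return (i + fraction) * graduation_value_mm


if __name__ == "__main__":
    # Idealised dial: 100 graduations of 0.01 mm per revolution (3.6 deg apart).
    marks = [k * 3.6 for k in range(101)]
    print(round(interpolate_dial(5.4, marks, 0.01), 4))
```

In this idealised example, a pointer halfway between the first and second marks reads 1.5 graduations, or 0.015 mm.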

Because of this automation with image processing, a calibration that was once labor-intensive, manual and highly prone to error is now faster, with reduced measurement errors and uncertainties, and it spares the user potential stress and injury. With the vision system's auto-recognition, more test items with more data points can be recorded faster than with conventional manual methods, and the operator is free to be productive during the automated measuring process.